Shared Awareness: A Better Way to Manage Comment Trolls

If we understand what motivates trolls, we can manage their disruptions by removing their incentive to comment.

By Ryan McGreal.

Posted April 15, 2010 in Blog.

1 Trolling

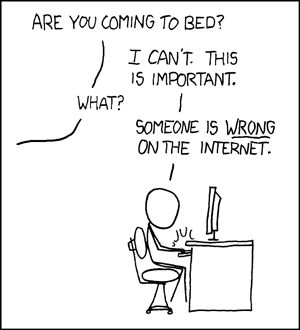

As everyone who has spent more than five minutes on the internet knows, in the absence of personal accountability, some people inevitably turn into assholes.

John Gabriel's Greater Internet Dickwad Theory

Not many, mind you, but even one determined troll on an internet forum can be enough to bring the entire affair crashing down.

The term "troll" comes originally from the fishing method of dangling a shiny lure out the back of a boat while putting along just quickly enough to grab the interest of a fish. The fish that can't resist the lure is snared on the hook and eaten.

(Of course, the term also connotes ugly, hairy brutes who lurk under bridges waiting to snatch unwary passers-by.)

Trolls posting anonymously on message boards absolutely love to provoke outrage. The troll's objective is not to change anyone's mind; rather, it's to write in such a way as to elicit a sequence of increasingly angry, frustrated replies that drag the discussion further and further off-topic until the original thread is in shambles.

Crude trolls subsist on the meager attention given to their vulgarity; but sophisticated trolls can keep a debate going for days by dancing on the fine line between feigned reasonableness and deliberate obtusity. They are by far the more damaging to online discourse.

What makes trolls disruptive is not the trollish comments themselves, but the chain of outraged replies they manage to elicit.

Successfully managing trolls requires understanding why this happens and breaking the chain of replies.

2 Shared Awareness

Trolls attract replies by exploiting the general absence of shared awareness on most mediated communications platforms. Shared awareness is a special state of meta-knowledge among collaborators that allows them to act cooperatively on the knowledge.

If I know something and you know something but I don't know that you know it and you don't know that I know it, we can't build on that knowledge even though we both know the same thing.

Shared awareness is not reached until: I know something, you know something, I know that you know, you know that I know, I know that you know that I know, and you know that I know that you know. (Whew!)

Once we all know that we all know the thing, we can then act on it. Without that shared awareness, our ability to put the information to use is sharply curtailed.

Now, sometimes the absence of shared awareness is a blessing. There are many potentially socially awkward situations - say, someone in a room full of people passes wind - in which the absence of shared awareness of who passed wind means everyone can take a position of plausible deniability.

Not only does the culprit avoid embarrassment, but also the other parties don't have to deal with the discomfort of having to address the incident. After all, most people don't like confrontations.

3 Someone is Wrong on the Internet!

In a conventional online comment system, several participants may each know something, but they do not necessarily have shared awareness about it (everyone knows that everyone knows).

When a troll posts a comment, for example, other participants may decide independently that the comment is inappropriate and/or wrong and/or offensive, but they have no way of knowing whether anyone else feels the same way.

A conscientious forum-goer could just ignore the troll and hope for the best, but silence is affirmation, and a comment left to stand unchallenged starts to look like it represents the prevailing view of the forum participants.

Hence, the conscientious forum-goer feels there is no choice but to reply to the troll, if only to assure others that at least one person disagrees with the comment.

Now the fish is hooked, and a skillful troll can keep that fish on the line for a long time.

4 Collateral Damage

In the meantime, other fish get scared away by the thrashing.

Pointless, unending debates between trolls and earnest opponents do two things:

- They disrupt and crowd out legitimate discussion; and

- They deter other potential commenters from getting involved, lest they be trolled as well.

Eventually, the community starts to dissipate altogether. At this point, the troll - really, a parasite (if you'll forgive the switched metaphor) - abandons the dying host and sets off in search of a fresh victim.

The important thing to remember is that the damage takes place not only because the troll posts a comment but also because an earnest commenter decides to reply.

In turn, earnest commenters get into it with trolls because in the absence of shared awareness, they have no other way to know whether the community as a whole accepts or rejects the troll's arguments.

Worst of all, the very dialogue with the troll, undertaken for the purpose of invalidating the troll's position, actually deters others from sharing their own positions and clearing up the matter.

5 Three Points of Attack

The three necessary conditions for a troll disrupting a forum are:

- An absence of shared awareness;

- A troll; and

- A well-meaning opponent to debate with the troll.

It's safe to generalize that any online forum will have both trolls and well-meaning opponents willing to debate with them. Since most online forums also don't have shared awareness, that means all three necessary conditions are likely to be present and disruption is likely to follow.

Since all three conditions are necessary for disruption to take place, we have three points of attack in trying to prevent it:

- Establish shared awareness;

- Block the trolls; or

- Block the well-meaning replies.

Of the three options, the first is the most promising. If you can establish a shared awareness that the troll's comment is inappropriate - i.e. everyone knows that everyone knows that the troll is not representative - then well-meaning opponents no longer feel obliged to reply.

In turn, since the objective of a troll is to provoke replies, a troll who can't get anyone to take the bait will eventually give up and move on to another forum.

So how do we go about establishing shared awareness on a text-based communications forum?

6 Clear Understanding of Rules

The first step to shared awareness is for everyone on the forum to have a clear understanding of the rules and a clear set of expectations on how to behave and how others should behave. It's not enough just to say of trolling that we know it when we see it.

The rules should be simple, straightforward, fair, and agreeable to the members. Ideally, they should participate in determining what the rules will be. That way, the members have a sense of investment and ownership in the policies that govern their actions on the forum.

However, the rules should probably include some variation on the following:

- Maintain a civil and polite tone, even when disagreeing with someone.

- Argue from evidence and cite your sources.

- Do not use rude or insulting language.

- Do not post comments that are needlessly inflammatory, seek to provoke an emotional reaction from others, are attempts to disrupt and derail the discussion, or abuse evidence and reasoning to defend an unjustifiable conclusion.

- Do not post comments that are off-topic.

The important thing is that the participants feel invested in community standards that define them as civil, reasonable and responsible. Again, most people - even on the internet - like to think of themselves in these terms and actually value the personal exchanges they get to have on socially functional online forums.

A pseudonymous screen name might be less personal than a real name, but it still inheres in a real personal identity, and people can still form relationships while communicating on the internet - if their community is successful in establishing and maintaining a shared awareness of their values.

7 Communicate Without Posting a Reply

By themselves, codes of conduct are only useful insofar as people see them being applied fairly and consistently.

For inappropriate comments, there needs to be a mechanism for members of the community to flag them as inappropriate without actually posting replies; and that flag must be visible to everyone so that an individual looking at the comment can see how many other people have already flagged it.

If you have used reddit, Digg, or Hacker News, you may already have experienced such a mechanism at work. On these three link-sharing sites, registered users can up-vote or down-vote both submitted articles and user comments.

While each site works a little bit differently, a given article's or comment's score reflects some combination of the number of upvotes, the number of downvotes, the 'reputations' of the users who assigned upvotes and downvotes, and the amount of time that has passed since the article or comment was posted.
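As a rough illustration of how those ingredients might combine, here is a minimal sketch. It does not reproduce the actual formula of any of the sites mentioned; the `Vote` type, the `comment_score` function, the reputation weights and the `gravity` exponent are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Vote:
    direction: int           # +1 for an upvote, -1 for a downvote
    voter_reputation: float  # hypothetical weight, e.g. 1.0 for an ordinary user


def comment_score(votes, age_hours, gravity=1.5):
    """Hypothetical score: reputation-weighted net votes, decayed by age.

    The decay term (age_hours + 2) ** gravity is an assumption chosen
    for illustration, not a formula taken from any real site.
    """
    weighted_net = sum(v.direction * v.voter_reputation for v in votes)
    return weighted_net / (age_hours + 2) ** gravity


# Two upvotes (one from a higher-reputation user) and one downvote.
votes = [Vote(+1, 1.0), Vote(+1, 2.0), Vote(-1, 1.0)]
fresh = comment_score(votes, age_hours=0)
stale = comment_score(votes, age_hours=10)
```

In this sketch the same set of votes counts for less as the comment ages, and a comment that attracts mostly downvotes ends up with a negative score that everyone can see.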

Some sites introduce an additional constraint in that registered users do not gain the ability to downvote comments until after they have first attained a minimum threshold of reputation based on how other users have upvoted and downvoted their articles and comments.
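A reputation gate of this kind could look something like the following sketch. The threshold value and the `can_vote` helper are hypothetical and are not drawn from any particular site.

```python
# Hypothetical threshold; real sites choose their own values.
MIN_REPUTATION_TO_DOWNVOTE = 100


def can_vote(user_reputation, direction, threshold=MIN_REPUTATION_TO_DOWNVOTE):
    """Return True if a user with this reputation may cast the given vote.

    Upvotes (+1) are open to every registered user in this sketch;
    downvotes (-1) require at least `threshold` reputation.
    """
    if direction not in (1, -1):
        raise ValueError("direction must be +1 or -1")
    if direction == 1:
        return True
    return user_reputation >= threshold
```

Under these assumptions a brand-new account can endorse comments right away but has to earn some standing in the community before it can vote anything down.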

Hacker News also starts fading the text colour of comments with net negative scores. The benefit to this is that it sends a strong visual message about how the community feels about the comment.

8 Remove the Incentive

So what happens after you implement this form of community moderation? Earnest, well-meaning commenters can see for themselves that the community has rejected a troll and hence no longer feel obliged to set the record straight in a reply.

Eventually, the troll gets bored at not being able to elicit any more replies and moves on.

This is the key to successful community moderation: establishing shared awareness removes the incentive to reply to trolls, which in turn removes the incentive to troll.

To borrow a phrase from John Gilmore, the other forum members learn to treat the trolls as damage and route around them. Eventually the trolls give up.

It might take a while to establish shared awareness, but the forum admins must be patient and remain steadfast in the face of what is likely to be frenetic opposition from the trolls before they finally quit.

Have no doubt: the trolls will immediately understand what is happening, and will fight to undermine it with every rhetorical tool at their disposal, accusing you of censorship, tyranny, megalomania and anything else they can think of. They will fling a litany of abuse at you in the hope that some of it will stick.

9 Free and Voluntary

The best way to fend off these attacks is to ensure that the moderation is free, voluntary, and non-censorious. The following guidelines seem to work well:

- Regularly reinforce community standards by having the policy displayed prominently on the site and reminding users of their important role in maintaining those standards.

- Troll comments can visually represent the negative judgments of their peers - for example by fading in colour or shrinking in size in proportion to the negativity of their score - but they should not disappear completely. (Note: it's reasonable to make an exception for obvious spam and/or libelous content and just delete it.)

- Moderation ought to apply directly to comments, not to the users making them. People respond to incentives, and some trolls actually stop trolling and start participating responsibly when their trollish posts are voted down but their fair posts are voted up.

- Similarly, blacklisting trollish users generally doesn't work. As long as it's easy to create sockpuppet accounts with throwaway email addresses, forums behave like badly applied wallpaper: when you push a bubble down, it just pops up somewhere else.
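The guideline above about fading comments in proportion to their negative score, without ever hiding them, might be sketched as follows. The function names, the `fade_per_point` step and the `min_opacity` floor are assumptions chosen for illustration only.

```python
def comment_opacity(score, fade_per_point=0.15, min_opacity=0.3):
    """Map a comment's net score to a CSS-style opacity between 0 and 1.

    Comments at zero or above are shown at full strength. Each point
    below zero fades the text a little more, but never below
    `min_opacity`, so a voted-down comment stays readable.
    """
    if score >= 0:
        return 1.0
    return max(min_opacity, 1.0 + score * fade_per_point)


def comment_style(score):
    """Build an inline style string a template could attach to a comment."""
    return f"opacity: {comment_opacity(score):.2f}"
```

The floor is the important design choice here: the community's judgment is visible at a glance, yet anyone who wants to read the comment still can, which keeps the moderation non-censorious.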

10 Censorship

Finally, a few words on censorship as an alternative method of blocking trolls:

Censorship is a slippery slope. Deciding which comments to delete is ultimately a judgment call, and people who are emotionally invested in the success of a forum are notoriously poor at remaining objective and applying standards consistently.

There's a risk that legitimate comments will get the axe. Even worse, there's a risk that an atmosphere of censorship will deter people from participating - the very problem that moderation was introduced to solve!

Censorship also gives the trolls strong rhetorical ammunition to accuse you of heavy-handedness and elicit sympathy from other participants. The perceived lack of transparency over which comments are acceptable puts everyone into an oppositional stance, and the trolls will be watching carefully for examples of hypocrisy.

Both philosophically and practically, censorship is at least as harmful as its targets. It depends naively on the consistent objectivity and benevolence of the moderators, introduces a chilling effect on participants who may hold legitimate beliefs that break with the majority, and arms trolls with a credible claim that their rights are being violated.